People need a framework or benchmark to compare against. Coding skills may be evaluated through the time and space complexity of the code that solves a problem, the time required to write it, the application of good practices, and so on. When it comes to management, though, soft skills are harder to evaluate, and there is often no reference point or previous experience with similar scenarios.

The higher you sit in management, the more abstract the concepts become, and the less of a framework you have for self-evaluation. What do you do when there is no reference point to validate your decisions and projections?

Knowledge classification

It may be lengthy, but you can state what you know and what you don’t.

Learning processes use comparisons to build and modify our understanding. When the outside world does not perfectly match an existing inner representation, the difference must be explained. This enlarges the “what I know” set, or at least corrects a misunderstanding and raises self-confidence. If the individual cannot resolve that difference, or is not interested in doing so, a new element can be added to the “what I don’t know” set.

Now you may be thinking: Wait! You are forgetting about the unknown that I am not even aware of. Personally, I am a hunter of the unaware-unknown, either to learn about it or at least to append some elements to the list of what I still don’t know. Hopefully, I can skim the subject and consciously rate how interested I am in digging deeper into it in the future. One day I realized that there is a huge number of Linux distributions, but I am not drawn to understanding what motivated the forks or each project’s genesis (although some are really curious).

Metrics and comparisons

When it comes to measuring, many different aspects can be tracked and computed. Tasks can be weighted by breadth, effort points, or expected time to completion, among other strategies; people and project status may be evaluated by the ratio of assigned versus fulfilled tasks, and so on.
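As a rough illustration of the ratio mentioned above, here is a minimal sketch in Python. The `Task` class, its fields, and the sample tasks are all hypothetical and made up for this example; a real tracker will have its own data model.

```python
from dataclasses import dataclass


@dataclass
class Task:
    # Hypothetical task record; field names are assumptions for this sketch.
    name: str
    effort_points: int
    done: bool


def completion_ratio(tasks):
    """Fraction of assigned tasks that are fulfilled, counting each task once."""
    if not tasks:
        return 0.0
    return sum(1 for t in tasks if t.done) / len(tasks)


def weighted_completion_ratio(tasks):
    """Fraction of assigned effort points that belong to fulfilled tasks."""
    total = sum(t.effort_points for t in tasks)
    if total == 0:
        return 0.0
    return sum(t.effort_points for t in tasks if t.done) / total


# Made-up sample data: two small tasks done, one large task pending.
tasks = [
    Task("login form", 2, True),
    Task("password reset", 1, True),
    Task("billing integration", 7, False),
]

print(completion_ratio(tasks))           # two of three tasks are done
print(weighted_completion_ratio(tasks))  # 3 of 10 effort points are done
```

The two numbers tell different stories about the same work: by count most tasks are done, while by effort most of the work is still ahead. Which weighting is appropriate depends on the project.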

This post is not focused on metrics, but it’s important to understand that elements belonging to the unaware-unknown set may be patiently waiting for us just around the corner. I haven’t researched it, but whether this set is empty does not seem to be computable. Thus, every project manager must assume that unforeseen obstacles are likely.

Even when a project has a backlog of defined tasks, one should pay attention to why a new task is added. Poorly defined road maps, as well as product owner hesitation, may lead to a great many tasks that divert progress. Task-related metrics may paint a picture of a good pace and an efficient team, while the project wanders until resources burn out.
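To make that last point concrete, here is a small sketch with made-up numbers. The function name `remaining_backlog` and the per-sprint `(completed, added)` pairs are assumptions for illustration, not a standard metric from any particular tool.

```python
def remaining_backlog(initial, sprints):
    """Return the backlog size after each sprint.

    `sprints` is a list of (completed, added) task counts per sprint.
    """
    remaining = initial
    history = []
    for completed, added in sprints:
        remaining = remaining - completed + added
        history.append(remaining)
    return history


# Hypothetical project: 30 tasks to start with, and the team closes
# 8 tasks every sprint while new tasks keep arriving.
sprints = [(8, 3), (8, 7), (8, 9), (8, 10)]
history = remaining_backlog(30, sprints)

print(history)  # [25, 24, 25, 27]
```

The completed count is a steady 8 per sprint, which looks healthy on its own, yet the backlog ends up larger than it was after the first sprint. Tracking how many tasks are added, and why, is what reveals the drift.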

Aside from formal and statistical evaluation, each person may compare themselves against others and against their own expectations as well. The latter may be based on previous performance and on a prediction given the characteristics of the task at hand. This personal self-evaluation is useful if conducted for the sake of improving, but it is biased and more subjective than not.

Bulletproof management

[At the cost of breaking the DRY principle, I must repeat it:] Personally, I am a hunter of the unaware-unknown because it is a great source of uncertainty, which in turn must be addressed and mitigated. Even then, there is no such thing as assured success or bulletproof management.

The thing is, how does a manager lessen the odds of bad assumptions and mistakes penetrating too deep into the backlog of tasks and, even worse, compromising tasks completed long ago? Some strategies are research, mockups, sketches, tests, minimum viable solution pilots, and so on.

Even experienced managers should constantly keep an eye on estimates and consider whether previously made assumptions need to be revisited.

How much is enough? New technologies and libraries are born every day, and there is no gain in considering them all unless the one left out would have stellar value for the project. Even so, one must decide when the research has been enough and move on to the next phase. Many other facets of a project are subject to the “how much” dilemma.

Are the aims high enough? The expectations of the different actors must be materialized into concrete features and objectives. Even so, people are affected by daily changes in mood and confidence, and the materialization of their expectations may vary, sometimes imperceptibly. Aiming too high may hurt the project, even preventing it from ever reaching the second stage. Aiming too low may lead the project to an early end because of a lack of interest.

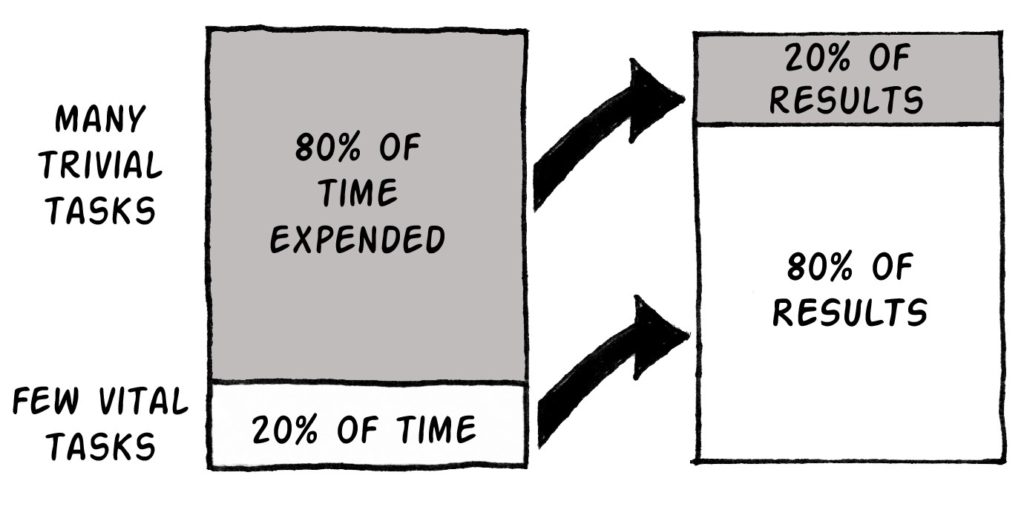

Is the quality reached enough? I invoke the Pareto 80/20 rule, need I say more? There are other handy concepts like the 90-90 rule, but the bottom line is: accept a solution that is good and complete enough, and move on.

Without trying to introduce recursion: hints on what counts as enough are needed too.

So, what to do?

Each person, no matter what roles they play, should:

- keep a reference framework to compare against,

- manage the list of unknowns,

- actively hunt for the unaware-unknown, and

- self-evaluate, aside from any formal metrics the project may use.

If you find it difficult to create a framework of reference, then you can add a new task to your backlog, because it is mandatory to have one. Be creative, and here is a hint: try to relate to people in a similar situation. You may give a bit of advice that is also useful for yourself.
